
Imagine being able to get a machine to write an app just by telling it what the app does. As far-fetched as it may seem, this scenario is already a reality.

According to Salesforce AI Research, conversational AI programming is a new paradigm that brings this vision to life, thanks to an AI system that builds software.

Introducing CodeGen: Generating Programs from Prompts

The large-scale language model, CodeGen, which converts simple English prompts into executable code, is the first step toward this goal. The user doesn’t write any code; instead, they describe in natural language what they need the code to do, and the computer does the rest.

Conversational AI refers to technologies that enable a human and a computer to interact naturally through a conversation. Chatbots, voice assistants, and virtual agents are examples of conversational AI.

A Different Kind of Coding Problem: Learning a New Language

Until now, there have been two ways to get computers to do useful work:

- use pre-existing computer programs that do what you want the machine to do

- write a new program to do it.

Option 1 is great when the computer programs that are needed are available.

But Option 2 has a built-in barrier: if the kind of program needed does not exist, writing new programs has always been limited to those who can speak the computer’s language.

Here are three of the current programming paradigm’s key drawbacks:

- Time-consuming: one must learn a programming language and correctly apply what one has learned.

- Difficult: some people find learning a new language to be a challenging task, and others fail to complete the training.

- Expensive: coding schools are quite costly.

These issues often impede or discourage new programmers’ education and development, especially among people from historically disadvantaged communities. To put it another way, traditional programming often presents people with a different kind of “coding problem”: one that isn’t posed on a test but is instead a formidable real-world challenge that many people cannot overcome.

The CodeGen Approach: Make Coding as Easy as Talking

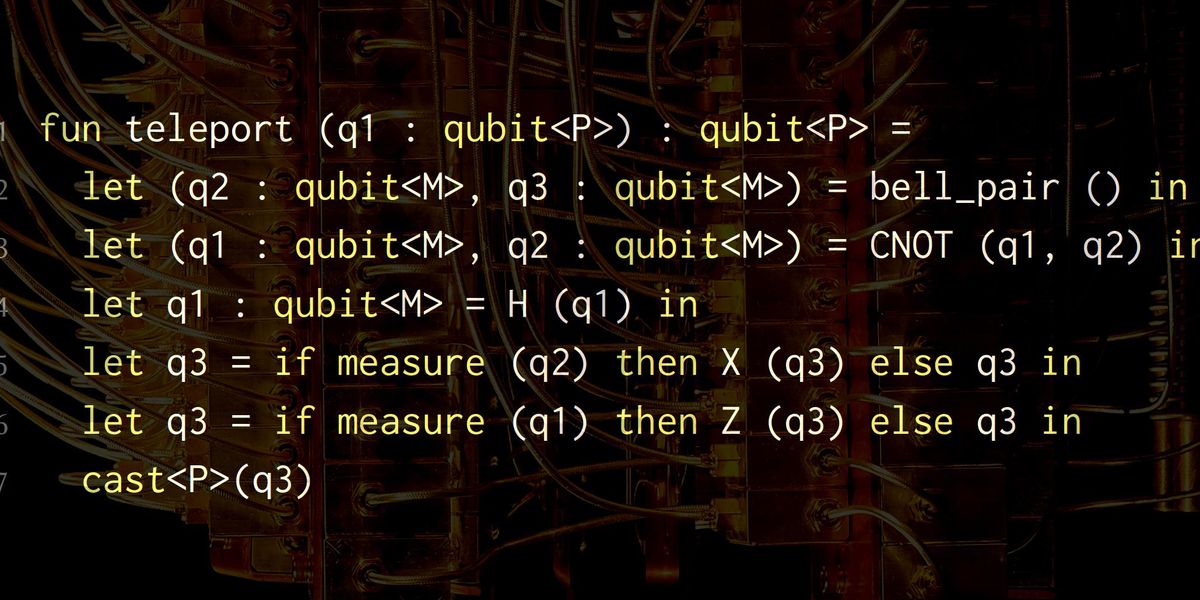

CodeGen makes programming as easy as talking, which is the great promise of conversational AI programming. The conversational AI programming implementation offers a glimpse into the future of democratizing software engineering for the general public. An “AI assistant” converts English descriptions into functional Python code, allowing anyone to write code, even if they have no programming experience. This conversational paradigm is enabled by the underlying language model, CodeGen, which will be released as open source to accelerate research.
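To make the idea concrete, here is a small hand-written illustration of the kind of pairing described above: a plain-English request and a Python function that would satisfy it. This is only a sketch. The prompt wording and the function name `count_words` are hypothetical, and the code was not produced by CodeGen itself.

```python
# Hypothetical plain-English request a user might give the assistant:
#   "Write a function that counts how often each word appears in a
#    sentence, ignoring upper and lower case."
#
# Hand-written example of code that would satisfy that request
# (an illustration only, not actual CodeGen output).

from collections import Counter


def count_words(sentence: str) -> dict:
    """Return a mapping from each lowercase word to its number of occurrences."""
    # Lowercase first so "The" and "the" are counted as the same word,
    # then split on whitespace to get the individual words.
    words = sentence.lower().split()
    return dict(Counter(words))


if __name__ == "__main__":
    print(count_words("The cat saw the other cat"))
```

The user in this scenario would only supply the request in the comment; writing the function body is the part that the conversational paradigm hands over to the model.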

CodeGen’s Two Faces: For Non-Coders and Programmers Alike

Although anyone, including non-coders, can use CodeGen to build an application from scratch, it can benefit in