The National Science Foundation just made a $650,000 bet that a University of Alabama in Huntsville team can make one of the world’s hottest new computer components far more resilient, durable and energy-efficient than the hard disk drives the new tech is rapidly replacing.

The NSF has awarded Dr. Biswajit Ray a five-year, $650,000 Faculty Early Career Development Program (CAREER) award to pursue his research. Ray and his team of doctoral students and graduate researchers are working on the project now.

Solid state drives (SSDs) are fast and efficient storage drives for computer data. Ray’s research aims to develop storage management techniques that can double their lifetimes, UAH said this week.

SSDs store data in flash memories, and the technology in those memories is also rapidly evolving. “I find recent technological developments very interesting,” Ray said in the university’s release. “Manufacturers are releasing chips that can hold about 1.33 terabits of information, and these chips are three-dimensional structures with storage cells located in multiple layers.”

Problems with the new drives include energy efficiency, security and privacy, Ray said. “The project addresses these issues through smart memory management techniques which can be implemented through software-based methods in the storage firmware,” he said. “We will evaluate these techniques on state-of-the-art flash memory chips.”

What could such storage enable in the future? Better predictive analytics and artificial intelligence in low-end computers capable of operating in “extreme environments, such as nuclear environments and space, where flash drives are attractive due to their light weight, high density and small size,” the announcement said.

Ray said his team’s work will allow system designers to build new memory management functions “that go beyond the algorithmic approaches” to evaluate and optimize memory performance. Two companies are already interested.

“I have a partnership with Western Digital and Infineon Technologies,” Ray said. “They are very much excited about the outcome of the project. They will provide technical mentorship and related resources for the success of the project.”

The three doctoral students working in Ray’s Hardware Reliability and Security Laboratory are Matchima Buddhanoy, Md Raquibuzzaman and Umeshwarnath Surendranathan.

Perhaps the second most famous law in electronics after Ohm’s law is Moore’s law: the number of transistors that can be made on an integrated circuit doubles every two years or so. Since the physical size of chips remains roughly the same, this implies that the individual transistors become smaller over time. We’ve come to expect new generations of chips with a smaller feature size to come along at a regular pace, but what exactly is the point of making things smaller? And does smaller always mean better?
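To put rough numbers on that doubling, here is a minimal Python sketch. It assumes an idealized doubling period of exactly two years and a constant die area; the function names `transistor_count` and `linear_shrink` are made up for illustration and the results are not real industry data.

```python
def transistor_count(initial_count: float, years: float,
                     doubling_period: float = 2.0) -> float:
    """Projected transistor count after `years`, assuming the count
    doubles once every `doubling_period` years (idealized Moore's law)."""
    return initial_count * 2 ** (years / doubling_period)


def linear_shrink(years: float, doubling_period: float = 2.0) -> float:
    """Factor applied to a transistor's linear dimensions if the die area
    stays constant: area per transistor halves each period, so each side
    scales with the square root of that."""
    return 2 ** (-years / (2 * doubling_period))


if __name__ == "__main__":
    # Ten years is five doublings; four years is two doublings.
    print(transistor_count(1000, 10))
    print(linear_shrink(4))
```

Under these assumptions, quadrupling the transistor count on the same die means halving each transistor's width and length, which is why feature size keeps coming up in this discussion.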

Over the past century, electronic engineering has improved massively. In the 1920s, a state-of-the-art AM radio contained several vacuum tubes, a few enormous inductors, capacitors and resistors, several dozen meters of wire to act as an antenna, and a big bank of batteries to power the whole thing. Today, you can listen to a dozen music streaming services on a device that fits in your pocket and can do a gazillion more things. But miniaturization is not just done for ease of carrying: it is absolutely necessary to achieve the performance we’ve come to expect of our devices today.

One obvious benefit of smaller components is that they allow you to pack more functionality in the same volume. This is especially important for digital circuits: more components means you can do more processing in the same amount of time. For instance, a 64-bit processor can, in theory, process eight times as much information as an 8-bit CPU running at the same clock frequency. But it also needs eight times as many components: registers, adders, buses and so on all become eight times larger. So you’d need either a chip that’s eight times larger, or transistors that are eight times smaller.
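The arithmetic behind that claim can be sketched in a few lines of Python. This assumes, as the paragraph does, that component count grows linearly with word width, and it reads "eight times smaller" as eight times less area per transistor; the helper name `scaling_for_wider_word` is hypothetical.

```python
import math


def scaling_for_wider_word(old_bits: int, new_bits: int) -> dict:
    """Rough scaling when widening a processor's word size, assuming
    component count is proportional to the number of bits."""
    ratio = new_bits / old_bits
    return {
        # How many times more registers, adder cells, bus lines, etc.
        "component_ratio": ratio,
        # Area reduction per transistor needed to keep the same die size.
        "area_shrink": ratio,
        # Corresponding reduction in each linear dimension.
        "linear_shrink": math.sqrt(ratio),
    }


if __name__ == "__main__":
    print(scaling_for_wider_word(8, 64))
```

Note that an eightfold reduction in area only requires each side of the transistor to shrink by a factor of about 2.8.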

The same thing holds for memory chips: make smaller transistors, and you have more storage space in the same volume. The pixels in most of today’s displays are made of thin-film transistors, so here it also makes sense to scale them down and achieve a higher resolution. However, there’s another, crucial reason why smaller transistors are better: their performance increases massively. But why exactly is that?

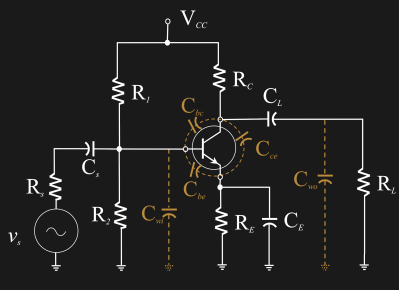

Whenever you make a transistor, it comes with a few additional components for free. There’s resistance in series with each of the terminals. Anything that carries a current also has self-inductance. And finally, there’s capacitance between any two conductors that face each other. All of these effects eat power and slow the transistor down. The parasitic capacitances are especially troublesome: they need to be charged and discharged every time the transistor switches on or off, which takes time and current from the supply.
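A first-order model makes the cost of those parasitic capacitances concrete. The sketch below uses the standard textbook relations for a capacitor charged through a resistor: a time constant of R·C, and an energy of C·V² drawn from the supply per full charge and discharge cycle. The component values in the example are illustrative assumptions, not measurements of any real transistor, and the model assumes the node switches on every clock cycle.

```python
def rc_time_constant(resistance_ohm: float, capacitance_f: float) -> float:
    """Time constant (seconds) for charging a capacitance through a
    series resistance; a larger value means slower switching."""
    return resistance_ohm * capacitance_f


def switching_energy(capacitance_f: float, supply_v: float) -> float:
    """Energy (joules) drawn from the supply for one full charge and
    discharge cycle of the capacitance: C * V^2."""
    return capacitance_f * supply_v ** 2


def dynamic_power(capacitance_f: float, supply_v: float,
                  frequency_hz: float) -> float:
    """Average power (watts) if the node completes one charge/discharge
    cycle per clock period."""
    return switching_energy(capacitance_f, supply_v) * frequency_hz


if __name__ == "__main__":
    # Hypothetical values: 1 kOhm series resistance, 1 fF parasitic
    # capacitance, 1 V supply, 1 GHz switching rate.
    print(rc_time_constant(1e3, 1e-15))
    print(dynamic_power(1e-15, 1.0, 1e9))
```

In this model, halving the capacitance halves both the time constant and the switching power, which is the link between physical size and performance that the rest of the argument builds on.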

The capacitance between two conductors is a function of their physical size: smaller dimensions mean smaller